[Customer Case Study] NTTPC Communications Co., Ltd.: Optimizing the Cost of a Pose Estimation Service with Amazon EC2 Inf1 Instances

On October 14, 2021, we held the Amazon EC2 event "Making the Most of Amazon EC2: Latest Lineup, Cost Performance Optimization, Customer Case Studies, and More." Fifteen years after the EC2 service launched in 2006, AWS offers a wide variety of EC2 instance types. To optimize cost performance, AWS also provides instances powered by its own Arm-based processor, AWS Graviton2, and its own inference chip, AWS Inferentia. At this event, CyberAgent, Inc. and NTTPC Communications Co., Ltd. presented their case studies.

For an overview of the entire event, please refer to the seminar recap blog post "Making the Most of Amazon EC2: Latest Lineup, Cost Performance Optimization, Advanced Customer Case Studies, and More."

In this blog post, we introduce NTTPC Communications Co., Ltd.'s presentation from the event, in which they evaluated Amazon EC2 Inf1 instances against other EC2 instances with the goal of optimizing the cost of their pose estimation API service (AnyMotion).

Evaluating Inf1 instances for inference in a pose estimation API service

Toshiki Takazawa, NTTPC Communications Co., Ltd.

Materials: [Slides]

NTTPC Communications provides a pose estimation API service and runs its infrastructure on AWS. In this presentation, they focused on Inf1 instances as the platform for serving their inference model, compared them with the GPU-equipped Amazon EC2 P3 and G4 instances and with Amazon Elastic Inference, and reported the results.

AnyMotion, a pose estimation API service

AnyMotion is a service that uses AI to estimate the positions of human joints in videos and images captured by a camera, and then visualizes body movement by analyzing the resulting skeletal data. In areas that have relied on human intuition and visual inspection, it enables coaching, posture checks, and reporting based on quantitative data. Because the service is offered as an API to companies that develop applications, those applications can evaluate and make judgments based on the analysis results and use them to return reports and feedback to end users. The service is being considered for use in a range of industries, including healthcare, entertainment, and rehabilitation.
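As an illustration of the kind of analysis an application might run on returned joint coordinates, the sketch below computes the angle at a joint (for example, the knee) from three 2D keypoints. It is a minimal sketch and not AnyMotion's API: the `joint_angle` function and the keypoint dictionary layout are hypothetical assumptions, and real responses may use a different format, coordinate system, or additional confidence scores.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) image coordinates of one joint (assumed layout)


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle at joint b, in degrees, between segments b->a and b->c.

    Example: a=hip, b=knee, c=ankle gives the knee angle (180 = straight leg).
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    if v1 == (0.0, 0.0) or v2 == (0.0, 0.0):
        raise ValueError("joints must not coincide")
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2(|cross|, dot) stays accurate near 0 and 180 degrees, unlike acos(dot / norms).
    return math.degrees(math.atan2(abs(cross), dot))


# Hypothetical keypoints for one person (NOT the actual AnyMotion response format).
keypoints = {"hip": (100.0, 200.0), "knee": (100.0, 300.0), "ankle": (200.0, 300.0)}
angle = joint_angle(keypoints["hip"], keypoints["knee"], keypoints["ankle"])
print(f"knee angle: {angle:.1f} deg")  # prints "knee angle: 90.0 deg"
```

An application could compare such angles against target ranges to drive the coaching or posture feedback described above.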

Challenges in operating AI services

Reducing operating costs is an important consideration when running AI services. Taking the future scaling of the service into account, NTTPC expected inference to account for more than 50% of total infrastructure costs, making it a particularly large cost item. AWS offers a variety of instances for inference, and NTTPC compared Inf1 instances, which promised better cost performance in production, against GPU instances (G4dn and P3), CPU instances (Amazon EC2 C5), and Amazon Elastic Inference.

What are Amazon EC2 Inf1 and AWS Inferentia?

Amazon EC2 Inf1 instances are equipped with AWS Inferentia, a machine learning inference chip designed by AWS to accelerate machine learning inference workloads and optimize their cost. Compared with GPU instances, Inf1 instances deliver up to 2.3 times higher throughput and up to 70% lower cost per inference. Each AWS Inferentia chip contains four NeuronCores. A NeuronCore implements a high-performance systolic array matrix multiply engine, which greatly speeds up typical deep learning operations such as convolutions and transformers. NeuronCores also have a large on-chip cache: by keeping intermediate results and the weight parameters needed for inference in the cache, they reduce accesses to external memory, which lowers latency and improves throughput.

AWS Inferentia, a machine learning inference chip designed by AWS
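The claim that a matrix multiply engine speeds up convolutions rests on the fact that a convolution can be rewritten as a single matrix multiplication. The sketch below shows one common lowering, im2col, in NumPy and checks it against a direct loop. It illustrates the general technique only; it makes no claim about how the Neuron compiler actually maps operators onto NeuronCores, and the function names are hypothetical.

```python
import numpy as np


def conv2d_direct(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Naive 'valid' 2-D convolution (cross-correlation, as in DL frameworks).

    x: (C, H, W) input, w: (K, C, R, S) filters -> (K, H-R+1, W-S+1) output.
    """
    C, H, W = x.shape
    K, _, R, S = w.shape
    out = np.zeros((K, H - R + 1, W - S + 1))
    for k in range(K):
        for i in range(H - R + 1):
            for j in range(W - S + 1):
                out[k, i, j] = np.sum(x[:, i:i + R, j:j + S] * w[k])
    return out


def conv2d_im2col(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Same result, computed as ONE matrix multiplication via im2col."""
    C, H, W = x.shape
    K, _, R, S = w.shape
    OH, OW = H - R + 1, W - S + 1
    # Each column holds one input patch flattened in (C, R, S) order.
    cols = np.empty((C * R * S, OH * OW))
    for i in range(OH):
        for j in range(OW):
            cols[:, i * OW + j] = x[:, i:i + R, j:j + S].ravel()
    # (K, C*R*S) @ (C*R*S, OH*OW): the kind of dense matmul a systolic array accelerates.
    return (w.reshape(K, -1) @ cols).reshape(K, OH, OW)


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))     # 3 channels, 8x8 image
w = rng.standard_normal((4, 3, 3, 3))  # 4 filters of size 3x3
assert np.allclose(conv2d_direct(x, w), conv2d_im2col(x, w))
print(conv2d_im2col(x, w).shape)  # prints "(4, 6, 6)"
```

The trade-off is memory: im2col duplicates overlapping input pixels, in exchange for turning many small dot products into one large, hardware-friendly matrix multiply.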

AWS Neuron, the software development kit for AWS Inferentia, can be used natively from machine learning frameworks such as TensorFlow, PyTorch, and Apache MXNet. It consists of a compiler, a runtime, and profiling tools, and enables high-performance, low-latency inference. On Inf1 instances, trained models must be compiled for NeuronCores in advance, but this requires changing only a few lines of code. Inf1 instances are also used at Amazon, for Amazon Alexa and as infrastructure for Amazon Advertising, which provides advertising solutions, where they contribute to optimizing cost performance.

Verification details

For the verification, NTTPC used the TensorFlow model actually used in the AnyMotion service. It is a custom pose estimation model that takes 256 x 192 input image data, estimates the positions of human joints, and returns their coordinates. On Inf1 instances, trained models must be compiled in advance with the Neuron compiler. Because part of the custom model contained nodes that the Neuron compiler did not support, those nodes were processed on the CPU during the verification.
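Conceptually, compiling a model that contains unsupported nodes splits it into segments that run on NeuronCores and segments that fall back to the CPU. The sketch below illustrates that idea on a simplified, linear list of operators. `partition_ops`, the operator names, and the support set are all hypothetical; a real model is a graph rather than a list, so the partitioning actually performed by the Neuron tooling is more involved.

```python
from itertools import groupby
from typing import List, Set, Tuple


def partition_ops(ops: List[str], supported: Set[str]) -> List[Tuple[str, List[str]]]:
    """Group a topologically ordered list of ops into contiguous device segments.

    Consecutive supported ops form a "neuron" segment; unsupported ops fall back to "cpu".
    """
    return [("neuron" if ok else "cpu", list(run))
            for ok, run in groupby(ops, key=lambda op: op in supported)]


# Hypothetical op names and support set, for illustration only.
ops = ["Conv2D", "Relu", "Conv2D", "CustomKeypointDecode", "Conv2D", "CustomHeatmapOp"]
supported = {"Conv2D", "Relu"}
segments = partition_ops(ops, supported)
for device, run in segments:
    print(device, run)
# Each neuron<->cpu boundary implies moving tensors between devices,
# so fewer, larger segments are usually preferable.
transitions = len(segments) - 1
print("device transitions:", transitions)  # prints "device transitions: 3"
```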

Machine learning inference has two different sets of requirements depending on the application's use case. In the first, strong real-time performance is required and given latency conditions must be met. The second, called batch inference, prioritizes efficiency and high throughput.
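For the real-time case, a latency condition is usually expressed as a percentile target, as in the P50 and P90 columns of the tables below. The sketch uses the nearest-rank percentile definition and hypothetical latency samples. The percentile method used in NTTPC's benchmark is not stated, so treat this definition as an assumption (NumPy's `percentile`, for instance, interpolates by default).

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample such that at least p% of samples are <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_latency_budget(latencies_ms: Sequence[float], budget_ms: float, p: float = 90.0) -> bool:
    """Real-time check: does the p-th percentile latency stay within the budget?"""
    return percentile(latencies_ms, p) <= budget_ms


# Hypothetical per-request latencies (ms) from a batch-size-1 benchmark run.
latencies = [7.1, 7.3, 7.4, 7.4, 7.5, 7.6, 7.6, 7.7, 7.9, 12.0]
print(percentile(latencies, 50), percentile(latencies, 90))  # prints "7.5 7.9"
print(meets_latency_budget(latencies, budget_ms=8.0))        # prints "True"
```

Note that the single 12.0 ms outlier does not move P90 here; a stricter target such as P99 would expose it.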

The instances compared in this verification and the results are as follows.

| Category | Instance | Inference batch size | Throughput [images/s] | Latency P50 [ms] | Latency P90 [ms] | Hourly price [USD] ※2 | Cost performance [images/0.0001 USD] |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Inf1 | inf1.xlarge | 1 | 517.80 | 7.38 | 7.68 | 0.308 | 604.05 |
| Inf1 | inf1.2xlarge | 1 | 568.97 | 7.46 | 7.80 | 0.489 | 418.87 |
| GPU | g4dn.xlarge | 1 | 113.46 | 10.06 | 10.32 | 0.71 | 57.53 |
| GPU | p3.2xlarge | 1 | 170.88 | 8.40 | 8.66 | 4.194 | 14.67 |
| Elastic Inference ※1 | eia1.medium | 1 | 32.15 | 36.01 | 36.41 | 0.220 | 52.61 |
| Elastic Inference ※1 | eia1.large | 1 | 65.76 | 19.17 | 19.46 | 0.450 | 52.61 |
| Elastic Inference ※1 | eia1.xlarge | 1 | 85.89 | 15.51 | 15.86 | 0.890 | 34.74 |
| CPU | c5.2xlarge | 1 | 18.43 | 75.73 | 77.12 | 0.428 | 15.50 |

With a focus on real-time performance, the verification was run with an inference batch size of 1, and the Inf1 instances achieved latencies of 8 milliseconds or less. Their cost performance at this setting was far ahead of every other instance: compared with the g4dn instance, the Inf1 instance delivered 4.5 times the throughput, reduced inference latency by about 25%, and reduced cost by about 90%.
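The cost-performance column can be reproduced from the throughput and hourly price columns: images processed per hour divided by the hourly price, expressed per 0.0001 USD. The sketch below does this and derives the g4dn.xlarge comparison from the batch-size-1 rows. The formula is inferred from the tables rather than stated in the source; recomputing from the listed prices agrees with the tables to within about 0.2%, and the small gap for inf1.xlarge at batch size 1 is presumably due to rounding of the listed hourly price.

```python
def cost_performance(throughput_ips: float, hourly_usd: float) -> float:
    """Images processed per 0.0001 USD of instance time (formula inferred from the table)."""
    images_per_hour = throughput_ips * 3600
    return images_per_hour / hourly_usd * 0.0001


# Batch-size-1 values taken from the table above.
inf1_xlarge = {"throughput": 517.80, "p50_ms": 7.38, "price": 0.308}
g4dn_xlarge = {"throughput": 113.46, "p50_ms": 10.06, "price": 0.71}

cp_inf1 = cost_performance(inf1_xlarge["throughput"], inf1_xlarge["price"])
cp_g4dn = cost_performance(g4dn_xlarge["throughput"], g4dn_xlarge["price"])
speedup = inf1_xlarge["throughput"] / g4dn_xlarge["throughput"]
latency_cut = 1 - inf1_xlarge["p50_ms"] / g4dn_xlarge["p50_ms"]
# Cost per image is price / throughput, so the saving is 1 - cp_g4dn / cp_inf1.
cost_cut = 1 - cp_g4dn / cp_inf1

print(f"cost performance: inf1.xlarge {cp_inf1:.2f}, g4dn.xlarge {cp_g4dn:.2f}")
print(f"throughput x{speedup:.2f}, P50 latency -{latency_cut:.0%}, cost per image -{cost_cut:.0%}")
```

The same function applied to the batch-inference rows (for example, 1011.87 images/s at 0.308 USD per hour) reproduces their cost-performance values as well.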

| Category | Instance | Inference batch size | Throughput [images/s] | Latency P50 [ms] | Latency P90 [ms] | Hourly price [USD] ※2 | Cost performance [images/0.0001 USD] |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Inf1 | inf1.xlarge | 8 | 1011.87 | 38.24 | 39.38 | 0.308 | 1182.71 |
| Inf1 | inf1.2xlarge | 8 | 1147.46 | 39.25 | 40.81 | 0.489 | 844.76 |
| GPU | g4dn.xlarge | 64 | 224.63 | 464.14 | 496.92 | 0.71 | 113.90 |
| GPU | p3.2xlarge | 64 | 736.92 | 264.85 | 272.09 | 4.194 | 63.25 |
| Elastic Inference ※1 | eia1.medium | 8 | 67.79 | 148.56 | 150.70 | 0.220 | 110.93 |
| Elastic Inference ※1 | eia1.large | 32 | 201.2 | 304.06 | 446.77 | 0.450 | 160.96 |
| Elastic Inference ※1 | eia1.xlarge | 32 | 300.69 | 238.69 | 244.39 | 0.890 | 121.63 |
| CPU | c5.2xlarge | 16 | 20.59 | 868.74 | 896.24 | 0.428 | 17.32 |

In batch inference, which emphasizes throughput, hardware accelerators such as GPUs and Inferentia can generally be used more efficiently with larger batch sizes. The GPU instances (g4dn and p3) reached their highest throughput at a batch size of 64, whereas the Inf1 instances already brought Inferentia utilization close to 100% at a batch size of 8, reaching their maximum throughput there. Compared with the g4dn instance, the Inf1 instance achieved about 4.5 times the throughput and a cost reduction of more than 90%.
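The batch-size behavior described above suggests a simple selection rule for a benchmark sweep: take the highest throughput that satisfies a latency budget, or the smallest batch size at which throughput has essentially plateaued. The sketch below is a hypothetical helper, not NTTPC's procedure; the sweep values are made up for illustration, and the 2% plateau tolerance is an arbitrary assumption.

```python
from typing import NamedTuple, Optional, Sequence


class BenchResult(NamedTuple):
    batch_size: int
    throughput_ips: float  # images per second
    p90_ms: float          # 90th-percentile latency of one batch request


def best_within_budget(results: Sequence[BenchResult],
                       latency_budget_ms: Optional[float] = None) -> Optional[BenchResult]:
    """Highest-throughput setting, optionally restricted to a P90 latency budget."""
    candidates = [r for r in results
                  if latency_budget_ms is None or r.p90_ms <= latency_budget_ms]
    return max(candidates, key=lambda r: r.throughput_ips, default=None)


def smallest_near_peak(results: Sequence[BenchResult], tolerance: float = 0.02) -> BenchResult:
    """Smallest batch size whose throughput is within `tolerance` of the peak.

    Past this point the accelerator is close to saturated, so larger batches mostly add latency.
    """
    peak = max(r.throughput_ips for r in results)
    near_peak = [r for r in results if r.throughput_ips >= (1 - tolerance) * peak]
    return min(near_peak, key=lambda r: r.batch_size)


# Hypothetical sweep for a single instance type (not NTTPC's measurements).
sweep = [
    BenchResult(1, 500.0, 8.0),
    BenchResult(4, 900.0, 20.0),
    BenchResult(8, 1000.0, 40.0),
    BenchResult(16, 1010.0, 80.0),
]
print(best_within_budget(sweep).batch_size)        # 16: raw maximum
print(best_within_budget(sweep, 50.0).batch_size)  # 8: best under a 50 ms P90 budget
print(smallest_near_peak(sweep).batch_size)        # 8: throughput has plateaued
```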

For further details of the verification, please refer to NTTPC's presentation materials.

Summary

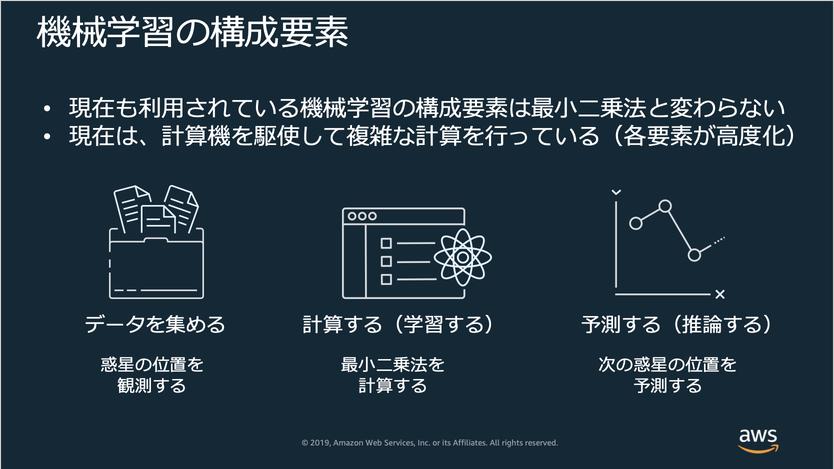

Machine learning workloads come with a wide range of requirements. Is the model a conventional machine learning model such as XGBoost or a random forest, or a deep learning model that requires a hardware accelerator? Is real-time performance required, or can requests be processed with batch inference? Can the workload tolerate an instance going down? Do inference requests arrive constantly or only sporadically? Ease of development, with an eye to time to market, and the development costs through to operation are likely to be concerns as well. In this case, NTTPC, whose operating costs are dominated by inference processing, compared a variety of instances centered on Inf1 and confirmed the high cost performance of Inf1 instances.

* For the Amazon EC2 options that address the various requirements of machine learning workloads, please see the session on Amazon EC2 options for machine learning workloads from the same event.

Many AWS customers, including Snap, Airbnb, and Sprinklr, use Amazon EC2 Inf1 instances to achieve high performance at low cost. We hope this event and blog post give AWS customers useful hints for optimizing the cost performance of their inference workloads.

Finally, we would like to thank NTTPC Communications Co., Ltd. for their cooperation with this event, and everyone who participated. Thank you very much.

This blog post was written by Tokoyo of Annapurna Labs.
